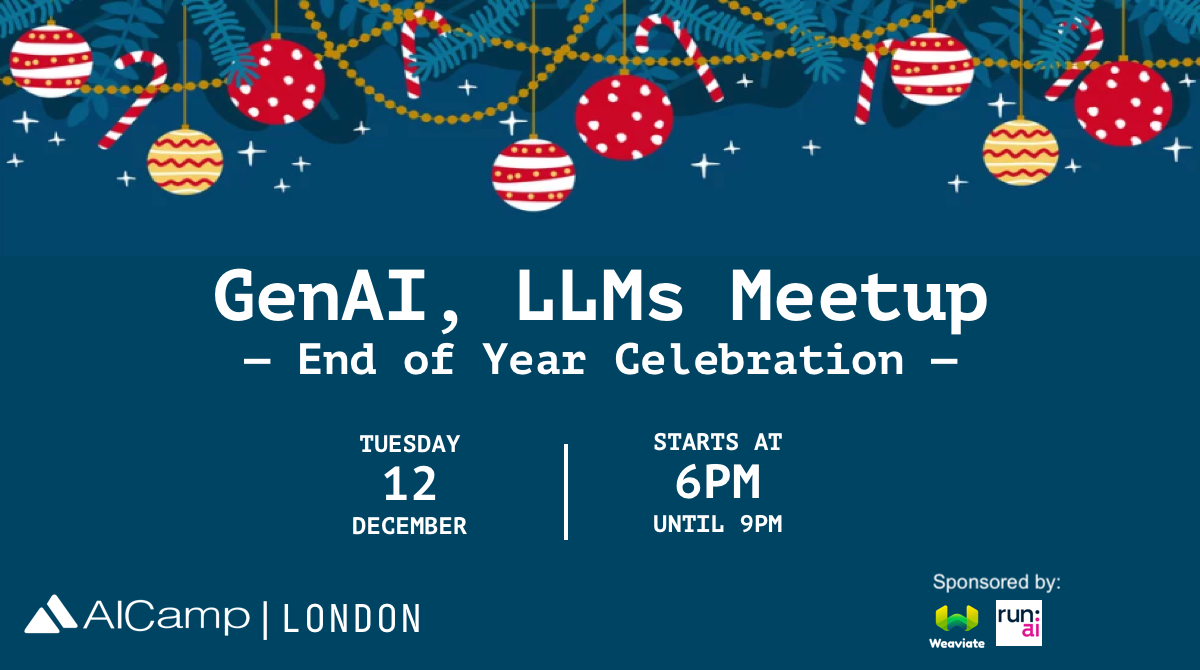

As the year winds down and the holiday spirit ramps up, we invite you to the most electrifying AI meetup of the year (end-of-year edition). Rather than our usual format, we're throwing the most exciting holiday bash for people who build AI!

Join AI developers, ML engineers, data scientists, and practitioners to celebrate all your hard work this year.

Agenda:

* 6:00pm: Check-in, food and networking

* 6:45pm: Welcome and community update

* 7:00pm: Tech Talks and Q&A

* 8:30pm: Community demos and showcase

* 9:00pm: Open discussion and mixer

Tech Talk: Why LLMs are such dumb geniuses (and how better search can help)

Speaker: JP Hwang @Weaviate

Abstract: In this session, we’ll dive into the world of large language models (LLMs) such as GPT-4 and Llama. These AI tools are superhuman readers and writers, yet they often produce outputs that are divorced from reality. So how do we channel these abilities the right way, helping them act like the proper geniuses they are?

The current state-of-the-art solution is Retrieval Augmented Generation (RAG), which combines search and retrieval with LLMs. You will learn what RAG is and some best practices for getting it to work well. Whether you’re a tech enthusiast, a professional in the field, or just curious about AI, this talk will give you a fresh perspective on why search is making a big comeback in artificial intelligence.
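To make the "search plus LLM" idea concrete, here is a minimal, illustrative sketch of the retrieval half of RAG, not the speaker's or Weaviate's implementation. It assumes a toy bag-of-words "embedding" and cosine similarity in place of a real embedding model and vector database, and it stops at building the augmented prompt; the actual LLM call is left out. The function names and sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model: lowercase word counts
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse word-count vectors
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    # Rank documents by similarity to the query and keep the top k
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    # Ground the LLM by pasting the retrieved passages into the prompt
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\nAnswer:"
    )


# Hypothetical knowledge base for illustration
DOCS = [
    "IDEALondon is at 69 Wilson St in London",
    "LoRA adds low-rank adapters to frozen weights",
    "Weaviate is a vector database",
]

print(build_prompt("Where is IDEALondon?", DOCS, k=1))
```

The resulting prompt would then be sent to an LLM of your choice. In a production setup, `embed` would call a real embedding model and `retrieve` would query a vector store.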

Tech Talk: Speed up delivering AI models into production

Speaker: Steve Blow @Run:ai

Abstract: GPU availability is becoming a key constraint in many AI infrastructures, for a variety of reasons. In this session, you’ll hear what Run:ai is seeing in the market and how it can ensure greater GPU availability, and therefore help you deliver your models into production faster.

Tech Talk: How "Fill-in-the-middle" code generation in LLMs works

Speaker: Tanay Mehta, Kaggle Grandmaster

Abstract: Generating code with large language models is not the same as generating text left to right. In this session, we will explore how LLMs are pre-trained to fill in the middle of code with no architecture changes, just a simple dataset transformation! We will cover how traditional LLMs are trained, how this technique differs, and walk through a practical coding example of how to use it in your own LLM pre-training.
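As a rough illustration of the "simple dataset transformation" mentioned above, the sketch below applies the prefix-suffix-middle (PSM) rearrangement described in OpenAI's fill-in-the-middle paper (Bavarian et al., 2022). It may differ from what the talk presents. The sentinel strings `<PRE>`, `<SUF>` and `<MID>` are hypothetical placeholders (real tokenizers define their own special tokens), and `fim_rate` is an assumed parameter controlling how often a document is transformed.

```python
import random

# Hypothetical sentinel strings; real tokenizers define their own special tokens
PRE, SUF, MID = "<PRE>", "<SUF>", "<MID>"


def fim_transform(doc: str, rng: random.Random, fim_rate: float = 0.5) -> str:
    """Apply a PSM fill-in-the-middle transform to a non-empty doc with probability fim_rate."""
    if rng.random() >= fim_rate:
        return doc  # keep as ordinary left-to-right training text
    # Pick two distinct cut points, splitting the document into prefix / middle / suffix
    i, j = sorted(rng.sample(range(len(doc) + 1), 2))
    prefix, middle, suffix = doc[:i], doc[i:j], doc[j:]
    # Move the middle to the end: plain next-token prediction on this string
    # teaches the model to generate the middle given both prefix and suffix
    return f"{PRE}{prefix}{SUF}{suffix}{MID}{middle}"


doc = "def add(a, b):\n    return a + b\n"
print(fim_transform(doc, random.Random(0), fim_rate=1.0))
```

Because the model still just predicts the next token, no architecture change is needed. At inference time you would supply `<PRE>prefix<SUF>suffix<MID>` and let the model generate the missing middle.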

Tech Talk: Efficient Fine-Tuning Methods: Exploring LoRA and Friends

Speaker: Saurav Maheshkar @Google Developer Experts

Abstract: A brief overview of recent studies on parameter-efficient fine-tuning methods. We will explore how LoRA and follow-up works significantly reduce the computational demands of fine-tuning large models while maintaining performance metrics. We will delve into ablation studies and explore how these methods compare with traditional fine-tuning and compression methods.
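For a feel of why LoRA is cheap, here is a minimal pure-Python sketch of the basic formulation from the original LoRA paper. It may not match the talk's presentation. The frozen weight `W` is left untouched, and only a low-rank update `B @ A` (scaled by `alpha / r`) would be trained. `B` starts at zero so the layer initially behaves exactly like the pre-trained one. The class and helper names, and the 0.01 standard deviation for `A`, are illustrative assumptions. There is no training loop here.

```python
import random


def matvec(M, v):
    # Multiply a matrix (list of rows) by a vector
    return [sum(m * x for m, x in zip(row, v)) for row in M]


class LoRALinear:
    """Frozen weight W (d_out x d_in) plus a trainable low-rank update B @ A."""

    def __init__(self, W, r, alpha, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(W), len(W[0])
        self.W = W  # frozen pre-trained weight, never updated
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]  # r x d_in
        self.B = [[0.0] * r for _ in range(d_out)]  # d_out x r, zero-init: update starts at 0
        self.scale = alpha / r

    def forward(self, x):
        # y = W x + (alpha / r) * B (A x)
        base = matvec(self.W, x)
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * d for b, d in zip(base, delta)]

    def trainable_params(self):
        # Only A and B are trained
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


def lora_param_count(d_in, d_out, r):
    # Trainable parameters of a rank-r adapter on a d_out x d_in weight
    return r * (d_in + d_out)


layer = LoRALinear([[1.0, 0.0], [0.0, 1.0]], r=1, alpha=2.0)
print(layer.forward([3.0, 4.0]))
print(lora_param_count(4096, 4096, 8), 4096 * 4096)
```

For a single 4096 x 4096 projection at rank 8, the adapter has 65,536 trainable parameters versus 16,777,216 for full fine-tuning of that matrix, about 0.39%.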

AI Community Showcase:

We have 5 to 10 demo desks available for community showcases, plus quick ~5-minute demos on stage. You are invited to apply here: Community Showcase

Venue:

IDEALondon, 69 Wilson St, London EC2A 2BB.

Sponsors:

- Weaviate, Run:ai

We are actively seeking sponsors to support the AI developer community, whether by offering venue space, providing food, or through cash sponsorship. Sponsors will have the chance to speak at the meetups, receive prominent recognition, and gain exposure to our extensive membership base of 10,000+ local and 300K+ developers worldwide.

Community on Slack

- Event chat: chat and connect with speakers and attendees

- Sharing blogs, events, job openings, project collaborations

Join Slack (browse/search and join #london channel)