How does the brain or mind (perhaps even an artificial one) work at an algorithmic level? While deep learning has produced tremendous technological strides in recent decades, there is an unsettling lack of “conceptual” understanding of why it works and to what extent it will work in its current form.

The goal of the workshop is to bring together theorists and practitioners to develop an understanding of the right algorithmic view of deep learning: characterizing the class of functions that can be learned; coming up with the right learning architecture that may (provably) learn multiple functions and concepts and remember them over time as humans do; and building a theoretical understanding of language, logic, RL, meta-learning, and lifelong learning.

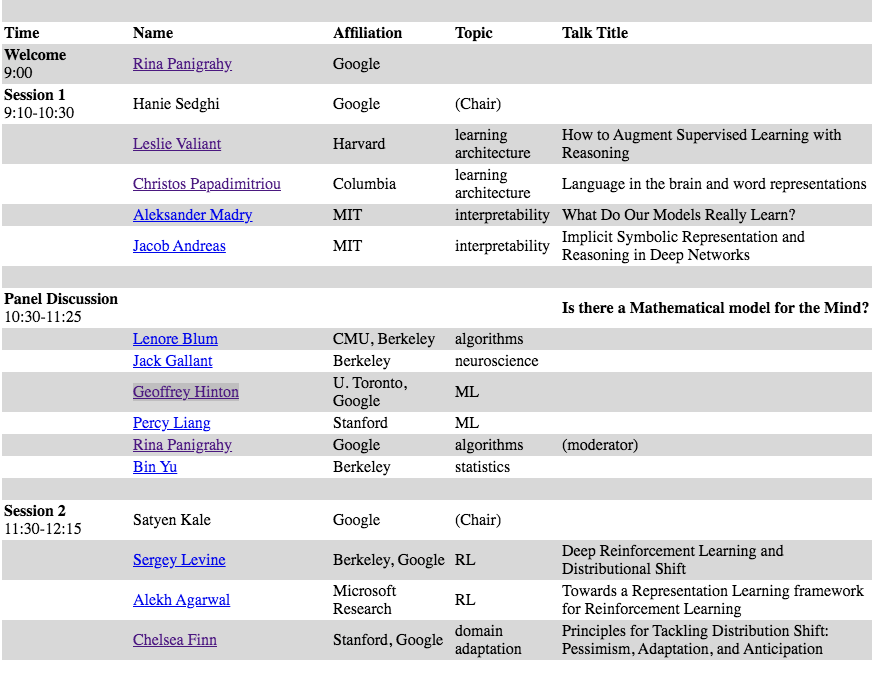

The speakers and panelists include Turing Award winners Geoffrey Hinton and Leslie Valiant, and Gödel Prize winner Christos Papadimitriou.

Topics include:

* Learning architecture and interpretability

* Algorithms and neuroscience

* ML and RL

* Language and learning theory

Featured Speakers:

* Geoffrey Hinton, from University of Toronto and Google.

* Leslie Valiant, from Harvard University

* Christos Papadimitriou, from Columbia University.

* Aleksander Madry, from MIT.

* Jacob Andreas, from MIT.

* Percy Liang, from Stanford.

* Bin Yu, from Berkeley.

* Lenore Blum, from CMU, Berkeley.

* Alekh Agarwal, from Microsoft Research.

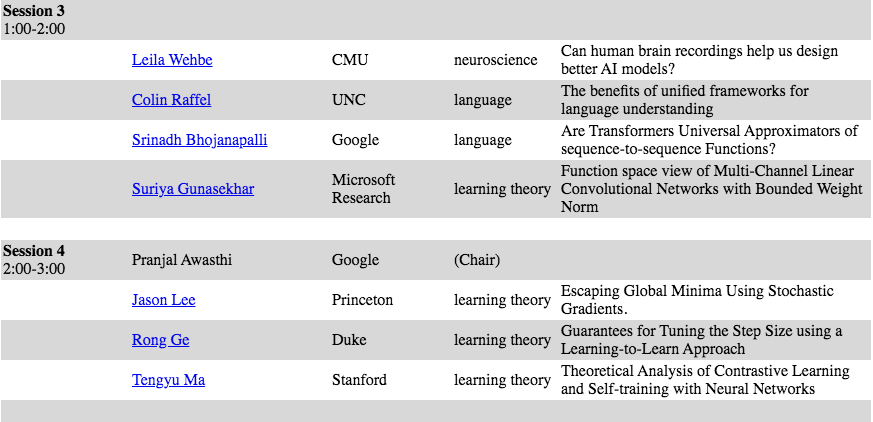

* Jason Lee, from Princeton University.

* and 20 more

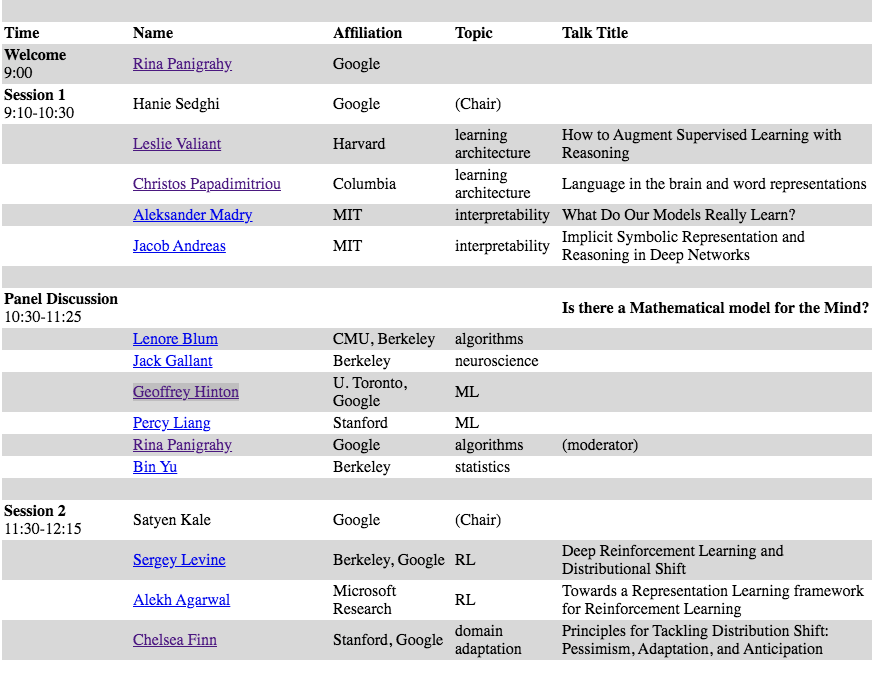

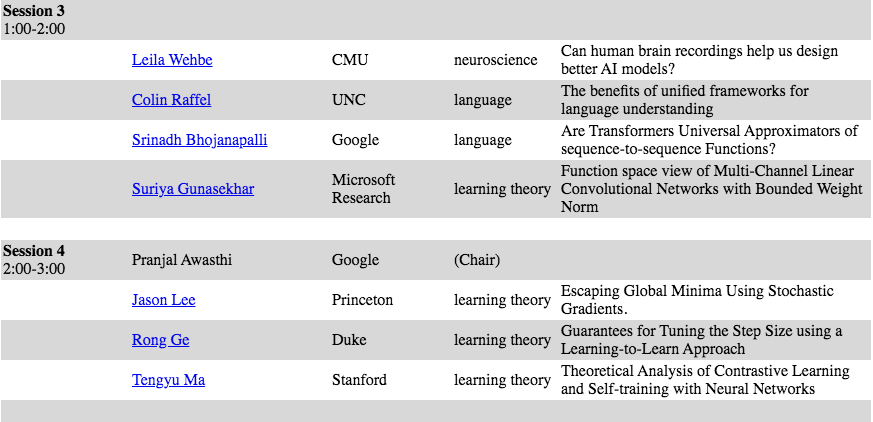

Agenda:

Workshop Website

This workshop is hosted by our partners at Google Research.
